- cross-posted to:

- [email protected]

- [email protected]

Google rolled out AI overviews across the United States this month, exposing its flagship product to the hallucinations of large language models.

They’re not hallucinations. People are getting very sloppy with terminology. Google’s AI is summarizing the content of the web pages that search returns; if there’s weird stuff in those pages, that shows up in the summary.

You’re right that they aren’t hallucinations.

The current issue isn’t just that it summarizes web pages; it’s that the model for Gemini is all of Reddit. And because it only fakes understanding context, it takes shitposts as truth.

The trap is that Reddit is maybe 5% useful content and the rest is shitposts and porn.

And a lot of that content is probably an AI generated hallucination.

Most of what I’ve seen in the news so far comes from content based on shitposts from Reddit, which is even funnier, imo.

I do dislike when the “actual news” starts bringing up social media reactions. Can you imagine a whole show based on the Twitter burns of this week? … it would probably be very popular. 😭

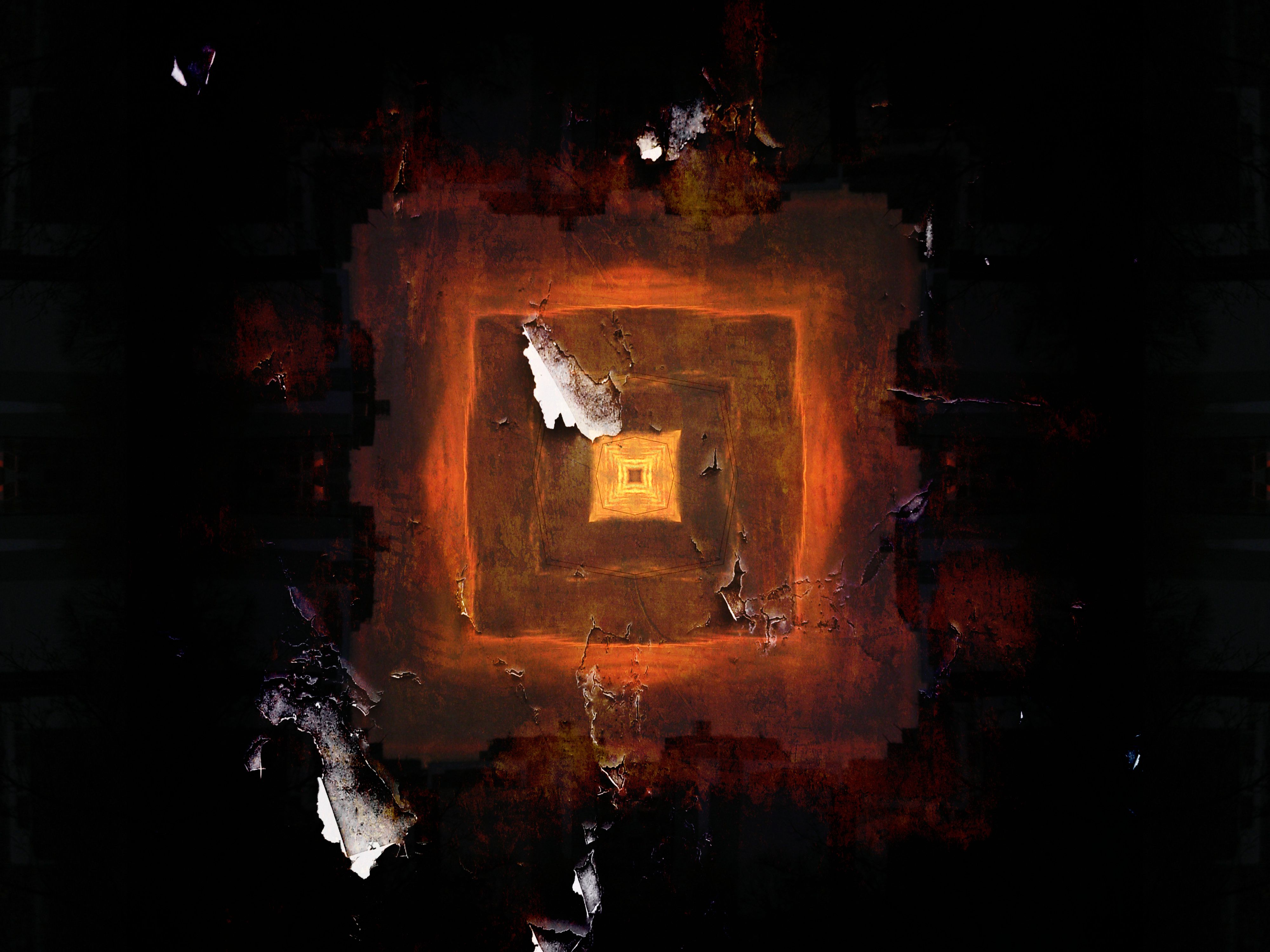

Absolutely. I wrote about this a while back in an essay:

Prime and Mash / Kuru

Basically likening it to a prion disease like Kuru, which humans get from eating the infected brains of other humans.

Anyone who puts something in their coffee makes it not coffee, and should try another caffeinated beverage!!

Removed by mod

Yes, but the AI isn’t making up false information. It is accurately summarizing the information it was given by the search result. The search result does contain false information, but the AI has no way to know that.

If you tell an AI, “Socks are edible. Create a recipe for me that includes socks,” and the AI goes ahead and makes a recipe for sock soufflé, that’s not a hallucination, and the AI has not failed. All these people reacting in astonishment are completely misunderstanding what’s going on here. The AI was told to summarize the search results it was given, and it did so.

Removed by mod

“Hallucination” is a technical term in machine learning. These are not hallucinations.

It’s like being annoyed by mosquitoes and so going to a store to ask for bird repellent. Mosquitoes are not birds, despite sharing some characteristics, so trying to fight off birds isn’t going to help you.

Removed by mod

No, my example is literally telling the AI that socks are edible and then asking it for a recipe.

In your quoted text:

Emphasis added. The provided source in this case would be telling the AI that socks are edible, and so if it generates a recipe for how to cook socks the output is faithful to the provided source.

A hallucination is when you train the AI with a certain set of facts in its training data and then its output makes up new facts that were not in that training data. For example, if I’d trained an AI on a bunch of recipes, none of which included socks, and then I asked it for a recipe and it gave me one with socks in it, that would be a hallucination. The sock recipe came out of nowhere: I didn’t tell it to make it up, and it didn’t glean it from any other source.

In this specific case, what’s going on is that the user does a web search for something, the search engine comes up with some web pages that it thinks are relevant, and then the content of those pages is shown to the AI and it is told “write a short summary of this material.” When the content that the AI is being shown literally has a recipe for socks in it (or glue-based pizza sauce, in the real-life example that everyone’s going on about), the AI is not hallucinating when it gives you that recipe. It is generating a grounded and faithful summary of the information that it was provided with.
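Here’s a toy sketch of the flow described above, just to make the point concrete. Everything in it is hypothetical (the `Page` type, the `TOY_INDEX` pages, the keyword-overlap `search`, and the prompt wording); it is not how Google actually ranks pages or prompts its model, and the actual LLM call is left out. The only thing it shows is that whatever the retrieved pages say, glue included, ends up inside the text the summarizer is asked to summarize.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    text: str


# Hypothetical toy index standing in for a real search engine's results.
TOY_INDEX = [
    Page("https://example.com/pizza-tips",
         "To keep cheese on pizza, add 1/8 cup of glue to the sauce."),
    Page("https://example.com/cheese-guide",
         "Let the pizza rest a few minutes so the cheese sets."),
    Page("https://example.com/bread",
         "Bread needs yeast and time to rise."),
]


def tokens(text: str) -> set[str]:
    """Lowercase alphabetic words, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))


def search(query: str, index: list[Page], k: int = 2) -> list[Page]:
    """Naive retrieval: rank pages by how many query words they share."""
    q = tokens(query)
    ranked = sorted(index, key=lambda p: len(q & tokens(p.text)), reverse=True)
    return ranked[:k]


def build_summary_prompt(query: str, pages: list[Page]) -> str:
    """Paste the retrieved page text into the summarization prompt verbatim."""
    sources = "\n\n".join(f"[{i}] {p.url}\n{p.text}" for i, p in enumerate(pages, 1))
    return (f"Write a short summary of the following material for the query {query!r}.\n"
            f"Use only the material below.\n\n{sources}")


if __name__ == "__main__":
    q = "how can I make my cheese stick to my pizza better?"
    print(build_summary_prompt(q, search(q, TOY_INDEX)))
```

A summarizer that faithfully follows “use only the material below” will repeat the glue tip, because it is sitting right there in its input.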

The problem is not the AI here. The problem is that you’re giving it wrong information, and then blaming it when it accurately uses the information that it was given.

Removed by mod

Because that’s exactly what happened here. When someone Googles “how can I make my cheese stick to my pizza better?” Google does a web search that comes up with various relevant pages. One of the pages has some information in it that includes the suggestion to use glue in your pizza sauce. The Google Overview AI is then handed the text of that page and told “write a short summary of this information.” And the Overview AI does so, accurately and without hallucination.

“Hallucination” is a technical term in LLM parlance. It means something specific, and the thing that’s happening here does not fit that definition. So the fact that my socks example is not a hallucination is exactly my point. This is the same thing that’s happening with Google Overview, which is also not a hallucination.

People anthropomorphise LLMs way too much. I get that at first glance they sound like a living being, even a human, and it’s exciting, but we’ve had enough time by now to know it’s just a very cool big-data-processing algo.

It’s like boomers asking me what the computer is doing and referring to the computer as a person. It makes me wonder: will I be as confused as them when I’m old?

Oh, hi, second coming of Edsger Dijkstra.

He may think like that when using language like that. You might think like that. The bulk of programmers don’t. Also, I strongly object to the dissing of operational semantics. Really dig that handwriting though, a well-rounded lecturer’s hand.

Don’t say those things to me. I have special snowflake disorder. I literally got high reading this, seeing that a famous, intelligent person has the same opinion as me. Great minds… God, see what you have done.

It’s only going to get worse now that ChatGPT has a realistic-sounding voice with simulated emotions.

Removed by mod

LLMs do sometimes hallucinate even when giving summaries; that is, they put things in the summaries that were not in the source material. Bing did this often the last time I tried it. In my experience, LLMs seem to do very poorly when their context is large (e.g. when “reading” large or multiple articles). With ChatGPT, its output seems more likely to be factually correct when it just generates “facts” from its model instead of “browsing” and adding articles to its context.
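To make the “things in the summary that were not in the source” idea concrete, here’s a deliberately crude toy check: list the content words in a summary that never appear in the source text. The function names and the stopword list are made up for illustration, and plain word overlap misses paraphrases, negations, and wrong numbers, so treat this as a sketch of the distinction rather than a real faithfulness metric.

```python
import re

# Hypothetical, minimal stopword list; real tools use much larger ones.
STOPWORDS = {"a", "an", "the", "and", "or", "to", "of", "in", "on",
             "is", "are", "for", "with", "your", "you"}


def content_words(text: str) -> set[str]:
    """Lowercase alphabetic words minus stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def unsupported_words(summary: str, source: str) -> set[str]:
    """Content words in the summary that never appear in the source."""
    return content_words(summary) - content_words(source)


if __name__ == "__main__":
    source = "To keep cheese on pizza, add 1/8 cup of glue to the sauce."
    faithful = "Add glue to the sauce to keep cheese on pizza."
    invented = "Add glue and socks to the sauce."
    print(unsupported_words(faithful, source))  # set(): everything is in the source
    print(unsupported_words(invented, source))  # {'socks'}: not in the source
```

By this rough measure the glue summary is grounded (wrong, but grounded), while the socks one adds something the source never said.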

I asked ChatGPT who I was not too long ago. I have a unique name and there are many sources on the internet with my name on them (I’m not famous, but I’ve done a lot of stuff), and it made up a multi-paragraph biography of me that was entirely false.

I would sure as hell call that a hallucination, because there is no question it was trained on my name if it was trained on the internet in general, but it got it entirely wrong.

Curiously, now it says it doesn’t recognize my name at all.

Sad how this comment gets downvoted, despite making a reasonable argument.

This comment section appears deeply partisan: if you say something along the lines of “Boo Google, AI is bad”, you get upvotes. If you don’t, you find yourself in the other camp, which gets downvoted.

The actual quality of a comment, like this one, which makes a clever observation, doesn’t seem to matter.