- cross-posted to:

- [email protected]

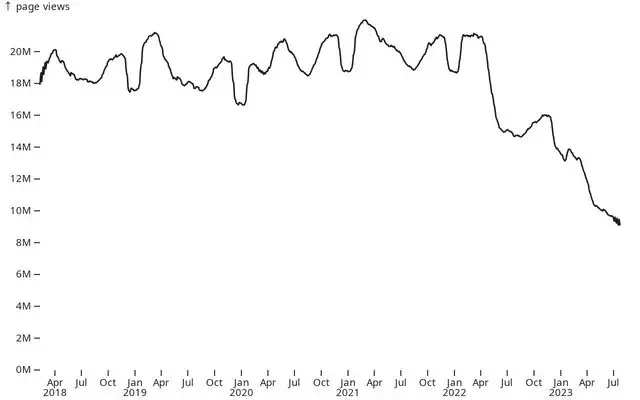

Over the past one and a half years, Stack Overflow has lost around 50% of its traffic. This decline is similarly reflected in site usage, with approximately a 50% decrease in the number of questions and answers, as well as the number of votes these posts receive.

The charts below show the usage represented by a moving average of 49 days.

What happened?

There’s a bunch of people telling you “ChatGPT helps me when I have coding problems.” And you’re responding “No it doesn’t.”

Your analogy is eloquent and easy to grasp and also wrong.

Fair point, and thank you. Let me clarify a bit.

It wasn’t my intention to say ChatGPT isn’t helpful. I’ve heard stories of people using it to great effect, but I’ve also heard stories of people who had it return the same non-solutions they had already found and dismissed. Just like any tool, actually…

I was just pointing out that it is functionally similar to scanning SO, tech docs, Slashdot, Reddit, and other sources looking for an answer to our question. ChatGPT doesn’t have a magical source of knowledge that we collectively also do not have – it just has speed and a lot of processing power. We all still have to verify the answers it gives, just like we would anything from SO.

My last sentence was rushed, not 100% accurate, and shows some of my prejudices about ChatGPT. I think ChatGPT works best when it is treated like a rubber duck – give it your problem, ask it for input, but then use that as a prompt to spur your own learning and further discovery. Don’t use it to replace your own thinking and learning.

Even if ChatGPT is giving exactly the same quality of answer as you can get out of Stack Overflow, it gives it to you much more quickly and pieces together multiple answers into a script you can copy and work with immediately. And it’s polite when doing so, and will amend and refine its answers immediately for you if you engage it in some back-and-forth dialogue. That makes it better than Stack Overflow, not just functionally similar to it.

I’ve done plenty of rubber duck programming before, and it’s nothing like working with ChatGPT. The rubber duck never writes code for me. It never gives me new information that I didn’t already know. Even though sometimes the information ChatGPT gives me is wrong, that’s still far better than just mutely staring back at me like a rubber duck does. A rubber duck teaches me nothing.

“Verifying” the answer given by ChatGPT can be as simple as just going ahead and running it. I can’t think of anything simpler than that; you’re going to have to run the code eventually anyway. Even if I was the world’s greatest expert on something, if I wrote some code to do a thing I would then run it to see if it worked rather than just pushing it to master and expecting everything to be fine.

This doesn’t “replace your own thinking and learning” any more than copying and pasting a bit of code out of Stack Overflow does. Indeed, it’s much easier to learn from ChatGPT because you can ask it “what does that line with the angle brackets do?” or “Could you add some comments to the loop explaining all the steps” or whatever and it’ll immediately comply.