- cross-posted to:

- [email protected]

- [email protected]

- [email protected]

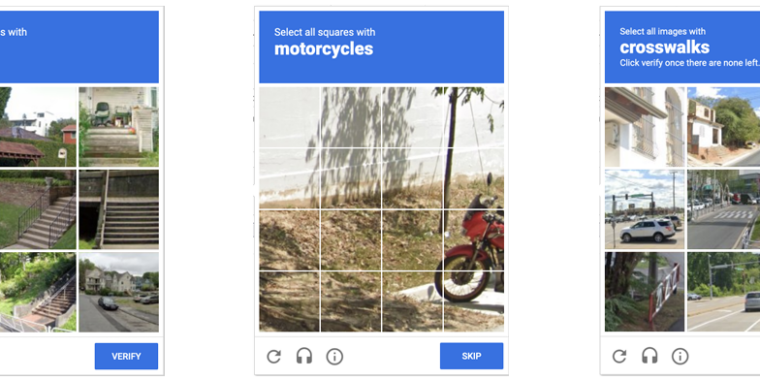

Anyone who has been surfing the web for a while is probably used to clicking through a CAPTCHA grid of street images, identifying everyday objects to prove that they’re a human and not an automated bot. Now, though, new research claims that locally run bots using specially trained image-recognition models can match human-level performance in this style of CAPTCHA, achieving a 100 percent success rate despite being decidedly not human.

ETH Zurich PhD student Andreas Plesner and his colleagues’ new research, available as a pre-print paper, focuses on Google’s reCAPTCHA v2, which challenges users to identify which street images in a grid contain items like bicycles, crosswalks, mountains, stairs, or traffic lights. Google began phasing that system out years ago in favor of an “invisible” reCAPTCHA v3 that analyzes user interactions rather than offering an explicit challenge.

Despite this, the older reCAPTCHA v2 is still used by millions of websites. And even sites that use the updated reCAPTCHA v3 will sometimes use reCAPTCHA v2 as a fallback when the updated system gives a user a low “human” confidence rating.

What’s ironic is that the main purpose of reCAPTCHA v2 is to train ML models. That’s why they show you blurry images of things you might see in traffic.

AFAIK the way it works is that of the 9 images, something like 6 are images the system knows are True or False, and another 3 are ones it is being trained on. So, it shows you 9 images and says “tell me which images contain a motorcycle”. It uses the 6 it knows to determine whether or not to let you pass, and then uses your choices on the other 3 to train an ML model.

Because of this, it takes me forever to get past reCAPTCHA v2, because I think it’s my duty to mistrain it as much as possible.
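For anyone curious, the 6-known / 3-unknown scheme described above can be sketched in a few lines of Python. This is only an illustration of the idea as the comment describes it, not Google’s actual implementation: the `Tile` class, the `grade_challenge` function, and the exact 6/3 split are all assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tile:
    image_id: str
    # True/False when the system already knows the answer, None when it does not.
    known_label: Optional[bool]


def grade_challenge(tiles, clicked):
    """Hypothetical grader: pass/fail comes only from the known tiles,
    while answers on the unknown tiles are collected as candidate labels."""
    known = [t for t in tiles if t.known_label is not None]
    unknown = [t for t in tiles if t.known_label is None]

    # The user passes only if every known tile was answered correctly.
    passed = all((t.image_id in clicked) == t.known_label for t in known)

    # Answers on unknown tiles cannot be graded, only recorded.
    collected = {t.image_id: (t.image_id in clicked) for t in unknown}
    return passed, collected


# Six tiles with known answers (even-numbered ones contain the object)
# plus three tiles the system has no label for yet.
tiles = [Tile(f"img{i}", i % 2 == 0) for i in range(6)]
tiles += [Tile(f"img{i}", None) for i in range(6, 9)]

passed, collected = grade_challenge(tiles, {"img0", "img2", "img4", "img7"})
print(passed, collected)
```

Under this sketch, deliberately wrong clicks only go unnoticed if they land on the three unknown tiles, and the user has no way to tell which tiles those are.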

You’re wasting your time. Your fingerprint is graded and discarded if you’re not reliable.

At least it adds noise to the system. It’s better than the people who are happily training the AI.

I’m sure they use the reliability of your inputs for known images to determine whether to use your input to train unknown images.
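A minimal sketch of what that kind of filtering could look like, assuming each user’s answers on known images are turned into a reliability score and only sufficiently reliable users get a vote on unknown images. Everything here is hypothetical: the function names, the 0.8 threshold, and the majority vote are illustrative choices, not anything documented about reCAPTCHA.

```python
from collections import defaultdict


def reliability(known_results):
    """Fraction of known images answered correctly (0.0 with no history)."""
    return sum(known_results) / len(known_results) if known_results else 0.0


def aggregate_labels(submissions, min_reliability=0.8):
    """submissions: list of (known_results, unknown_answers) pairs, one per user.
    known_results is a list of booleans (correct or not on known images);
    unknown_answers maps image_id -> the user's True/False answer.
    Returns a majority-vote label per unknown image, using reliable users only."""
    votes = defaultdict(lambda: [0, 0])  # image_id -> [no_votes, yes_votes]
    for known_results, unknown_answers in submissions:
        if reliability(known_results) < min_reliability:
            continue  # unreliable user: their answers are thrown away
        for image_id, answer in unknown_answers.items():
            votes[image_id][int(answer)] += 1
    # Ties are left unlabeled rather than guessed.
    return {img: yes > no for img, (no, yes) in votes.items() if yes != no}


submissions = [
    ([True] * 6, {"a": True}),            # perfect on known images
    ([True] * 5 + [False], {"a": True}),  # 5 of 6 correct, still above 0.8
    ([False] * 6, {"a": False}),          # deliberately wrong everywhere
]
print(aggregate_labels(submissions))
```

In this toy version, someone who mislabels everything fails the known images too, so their answers on the unknown ones never reach the vote.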