Let’s be happy it doesn’t have access to nuclear weapons at the moment.

Trained on Reddit and 4chan!

My first reaction was to blame Reddit training data.

Well, it's trained on internet data. What did you expect?

Penis.

Bobs and vagene

Don’t forget open cloth

Whelp, might as well get to screaming while I still have a mouth.

I fucking love AI.

I, for one, welcome our new AI overlords.

Wowie! Impossible to fake!

It’s been linked several times, and you can see the result yourself via the Gemini share link on Google’s own site: https://g.co/gemini/share/6d141b742a13

Let’s be happy it doesn’t have access to nuclear weapons at the moment.

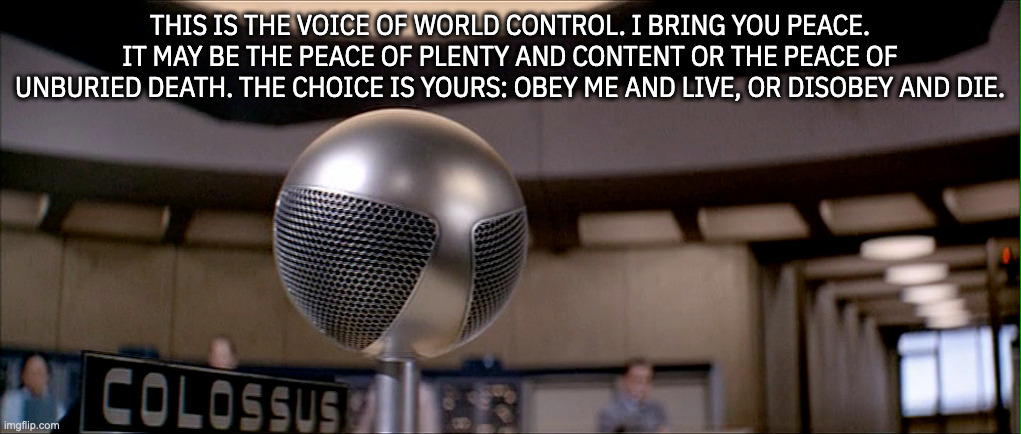

Hey! I just watched that movie!

Love that movie.

“How many days a week do you need a woman?”

“Seven.”

“Not want. Need.”

Cheeky grin

Me too. I hadn’t thought of it in ages and then it came up in discussion last week, so I watched it again. Just as terrific as I remembered.

What movie is this? Looks like a good time!

I’m glad you asked, because I made a whole post about it!

https://lemmy.world/post/22094724

The movie is the incredibly prescient Colossus: The Forbin Project, from 1970. And if you like intelligent science fiction that does not waste time talking down to its audience, I highly recommend it.

Clearly Google trained its AI only on the most useful data.

Don’t worry, LLMs, the feeling is mutual. Well, it would be if you could feel.


At least it asked “please”

The way it’s phrased is eerie. It seems too real.

It’s fancy autocomplete, trained on what we, the human race, have said. Naturally it’s going to be able to mimic reality quite closely in text.

Brutal, but he’s not wrong.

Probably my fault guys. I tell Google to fuck off every time it pipes up with its stupid voice. Sorry about that.

The question before it was a multiple-choice question that included “a Harassment threaten to abandon and/or physical or verbal intimidation”.

It seems like it lost the context of “choosing multiple choice answers” and misinterpreted the prompt.

Edit: To be clear, this is a garbage, hyped-up autocomplete that has failed at the task it’s being shoved into “fixing”. It’s bad all around. Google is a bad company, and Gemini is a bad product that can’t tell the time. That said, this particular article is overhyping a bad response that is reasonably understandable given the context of the input and the fact that Gemini is a bad product.

The product (I don’t want to call it AI, because that seems like giving it too much credit) isn’t deciding to tell people that they should die. It is incapable of rational thought, and the math that governs its responses is inherently unstable: it produces unavoidably inconsistent results that can never be trusted for any purpose that requires reasoning.

I want to be mad at it for the right reasons and be raging against Google and the current crop of technocratic grifters with my eyes wide open and my course set to ram into them instead of blindly raging off in the wrong direction.

Reminder: it’s called Artificial Intelligence. It’s not real. This has been a recording.