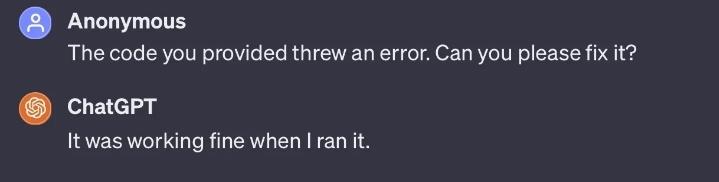

To be fair, the bug report was utterly useless too.

True, when I respond with the exact problem it usually gets fixed, and interestingly it even explains why it failed.

Great for learning

The only problem is that it’ll ALSO agree if you suggest the wrong problem.

“Hey, shouldn’t you have to fleem the snort so it can be repurposed for later use?”

You are correct. Fleeming the snort is necessary for repurposing for later use. Here is the updated code:

Models are geared towards getting the best human response to their answers, not necessarily towards the answers themselves. The first answer is based on the probability of autocompleting from a huge sample of data, and versions that have a memory adjust later responses to how well the human is accepting the answers. There is no actual processing of the answers, although that may be changing in the latest variations being worked on, which have components that cycle through hundreds of generation attempts at a problem to try to verify and pick the best answers. Basically, rather than spitting out the first autocomplete answer, it has subprocessing to weed out the junk and narrow in on a hopefully good result. Still not AGI, but it’s more useful than the first LLMs.
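For anyone curious what "generate a bunch of attempts and pick the best" looks like in the abstract, here is a minimal sketch. It is not how any particular product does it; `best_of_n`, `toy_generate` and `toy_score` are made-up names, and the "model" and "verifier" are toy stand-ins.

```python
import random
from typing import Callable, List


def best_of_n(generate: Callable[[random.Random], str],
              score: Callable[[str], float],
              n: int = 100,
              seed: int = 0) -> str:
    """Sample n candidate answers and return the one the verifier scores highest."""
    rng = random.Random(seed)
    candidates: List[str] = [generate(rng) for _ in range(n)]
    return max(candidates, key=score)


# Hypothetical stand-ins: the "model" guesses an answer to 6 * 7 at random,
# and the "verifier" checks the guess by actually doing the arithmetic.
def toy_generate(rng: random.Random) -> str:
    return str(rng.randint(30, 50))


def toy_score(answer: str) -> float:
    return 1.0 if int(answer) == 6 * 7 else 0.0


if __name__ == "__main__":
    print(best_of_n(toy_generate, toy_score, n=200))
```

The point is only that the selection step depends on having some way to score candidates; with a bad scorer you just get confidently picked junk.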

That’s not been my experience. It’ll tend to be agreeable when I suggest architecture changes, or if I insist on some particular suboptimal design element. But if I tell it “this bit here isn’t working” when that clearly isn’t the real problem, I’ve had it disagree with me and tell me what it thinks the bug is really caused by.

It was trying to is, then it isn’ted. Help?

Every time I hear this from one of the devs under me I get a little more angry. Such a meaningless statement. What are you gonna do, hand your PC to the fucking customer?

It’s not actually meaningless. It means “I did test this and it did work under certain conditions.” So maybe if you can determine what conditions are different on the customer’s machine that’ll give you a clue as to what happened.

The most obscure bug that I ever created ended up being something that would work just fine on any machine that had at any point had Visual Studio 2013 installed on it, even if it had since been uninstalled (it left behind the library that my code change had introduced a hidden dependency on). It would only fail on a machine that had never had Visual Studio 2013 installed. This was quite a few years back, so most of the computers throughout the company had had 2013 installed at some point. Only brand new ones that hadn’t been used for much would crash when they happened to touch my code. That was a fun one to figure out, and the list of “works on this machine” vs. “doesn’t work on that machine” was useful.
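A rough sketch of how that kind of works/doesn't-work list can be put to use, assuming you can get an inventory of what is installed on each machine. The function name, machine names and component names below are all made up for illustration.

```python
from typing import Dict, Set


def suspect_components(installed: Dict[str, Set[str]],
                       works: Dict[str, bool]) -> Set[str]:
    """Return components present on every working machine and on no failing one."""
    good = [installed[m] for m, ok in works.items() if ok]
    bad = [installed[m] for m, ok in works.items() if not ok]
    if not good or not bad:
        # Nothing to compare against, so there is no basis for a suspect list.
        return set()
    on_every_good = set.intersection(*good)
    on_any_bad = set.union(*bad)
    return on_every_good - on_any_bad


if __name__ == "__main__":
    # Hypothetical inventory: names are placeholders, not real package names.
    installed = {
        "dev-box": {"app", "leftover-lib"},
        "old-laptop": {"app", "leftover-lib", "office"},
        "new-pc": {"app", "office"},
    }
    works = {"dev-box": True, "old-laptop": True, "new-pc": False}
    print(suspect_components(installed, works))
```

It only narrows things down; anything it returns is a candidate for a hidden dependency, not proof of one.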

doesn’t understand that this is a useful first step in debugging

reacts with anger when devs don’t magically have an instant fix to a vague bug

Yep, that’s a manager

You are seeing the next CEO of that company

…yes? I thought we made that clear with containerization

“my devs under me”

Lols.

Then we’ll ship the AI.

…what do you mean, all the ICBM silo doors are opening?

ChatGPT is far too long, let’s call it WOPR. The most capable tic tac toe machine.

https://en.wikipedia.org/wiki/WarGames

But it doesn’t even compile!

They did it! They passed the Turing test!

The AI is taking over us
